Artificial intelligence (AI) chatbots, like ChatGPT and Bard AI, are significant and powerful technologies, but they struggle with accurately citing data and facts.

Key Details

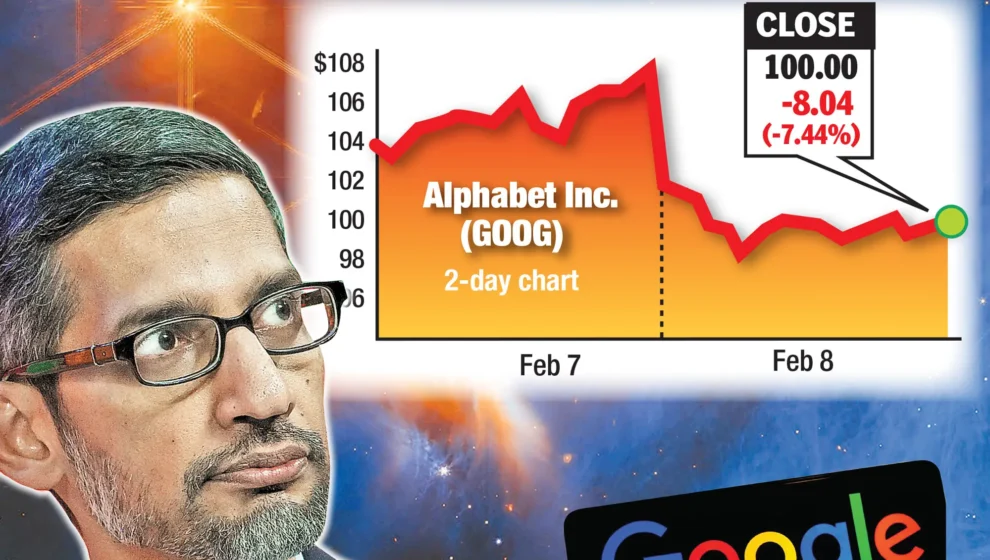

- Google parent Alphabet lost $100 billion in valuation on February 8 following a demonstration of Bard AI, in which the AI incorrectly stated that the James Webb Space Telescope had taken the first photograph of an extrasolar planet. That image was actually captured in 2004 by the European Southern Observatory’s Very Large Telescope (VLT), a ground-based observatory.

- The following week, beta testers of Microsoft’s new ChatGPT-powered Bing search engine noticed the same issue: the chatbot got multiple answers completely wrong and could not distinguish between fact and fiction.

- In a presentation last week, Microsoft executives announced a new suite of AI-powered features. They defended the technology as “usefully wrong,” claiming its new “Copilot” software is still powerful and dismissing small mistakes as easily fixed.

Why It’s Important

AI language models have to be trained on datasets in order to learn how to produce convincing language. ChatGPT currently does not have access to data from after December 2021, meaning it cannot answer questions on current events, trends, or scientific developments since then. As the demonstrations above show, existing chatbots also struggle to cite facts correctly.

This has placed a severe limitation on the current software’s applicability. While these results haven’t slowed the ongoing AI arms race, they have cut against promises that chatbots could answer complex questions in an easy-to-understand way for the benefit of users. It is unclear whether the technology will be useful for accurate citations.

Breaking Down The Issue

Dr. Jie Wang is a professor of computer science at the University of Massachusetts Amherst. His work in AI has focused on downstream applications of language models and data modeling. He tells Leaders Media that the citation issue is not so much a technical problem as a limitation of resources and training.

“Data gathering from the internet is often done by deploying web crawlers, which are programs to automatically visit websites and collect web publications, news, blogs, academic papers, and images, among other things. These data are then processed and turned into the pure text form suitable as input for training neural networks,” says Wang.
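The text-processing step Wang describes can be illustrated with a minimal Python sketch. It is not the pipeline used for any of the chatbots mentioned here; the `TextExtractor` class, the `html_to_text` helper, and the sample page are hypothetical names and data chosen for illustration, and the sketch assumes a crawler has already downloaded the page.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects visible text from an HTML page, skipping script and style blocks."""

    SKIPPED_TAGS = {"script", "style"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0  # greater than 0 while inside <script> or <style>
        self._chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep only non-empty text that is not inside a skipped block.
        if self._skip_depth == 0 and data.strip():
            self._chunks.append(data.strip())

    def text(self):
        return " ".join(self._chunks)


def html_to_text(html):
    """Hypothetical helper: reduce one crawled page to plain text."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return parser.text()


# Made-up sample page standing in for crawled content.
sample_page = (
    "<html><head><style>p {color: red}</style></head>"
    "<body><h1>Headline</h1><p>First paragraph.</p>"
    "<script>var x = 1;</script></body></html>"
)
print(html_to_text(sample_page))
```

A production pipeline would likely add further steps, such as removing duplicates and filtering low-quality pages, before the text is used for training.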

Bard AI, ChatGPT, and GPT-4 are built on language models trained on large datasets, and the quality of their answers depends on the quality of that training data. These early models are bound to make many mistakes, depending on the quality and volume of the information sampled. As Wang notes, it is possible to train a chatbot to deliberately give incorrect answers, or for two chatbots trained on the same information to come to different conclusions.

These are issues that the development teams behind ChatGPT and Bard AI are fully aware of. They could resolve some of them by expanding the training dataset with more up-to-date information, but doing so is extremely costly. Training up to a fixed data cutoff, even though some of that information may be outdated by the time the model is in use, is a generally accepted practice.

“Keep in mind that training a large model is extremely costly. It takes a long time and a lot of powerful machines to train a large model. For economic reasons, model updates are not done frequently. A company can update its model with new data at any time. The only concern is whether it is worth doing because of the time and money needed to complete this task,” says Wang.

An Optimistic Outlook

Even with these limitations, the technology has proved disruptive overall, with schools and universities already needing to roll out tools that can scan essays for text generated by ChatGPT. As the online proofreading website Scribbr notes, none of the official style guides has released a citation format for ChatGPT. Citation authorities are still uncertain whether allowing ChatGPT-sourced information is appropriate.

Dr. Wang speaks optimistically about the potential of AI. One of the applications he is exploring is improving K-12 students’ reading comprehension skills, and he believes that more powerful AI creates new possibilities for helping students strengthen those skills.

“ChatGPT is a double-edged sword. On the one hand, schools and universities should ban students from using ChatGPT to produce term papers and computer programs in their coursework, among other things. On the other hand, ChatGPT can also be used to help improve teaching and research in a positive way,” he says.